The Challenges of Kubernetes on Bare Metal

- Kiera Quinn

- -

- Bare Metal Automation

Across the industry, platform teams are under pressure to deliver Kubernetes clusters directly on bare metal hardware. This pressure often comes from above. Executives want improved performance, lower cost, and full control over infrastructure for workloads like AI training, virtualization, and edge computing. The appeal is understandable, but implementation is harder than it seems.

Many of these teams are well-equipped. They have a lot of Kubernetes experience. What they haven’t encountered before is the absence of abstraction. Cloud platforms and virtualization layers have trained a generation of engineers to expect infrastructure to behave predictably. Bare metal doesn’t.

Despite Kubernetes’ growing maturity, running it on physical servers remains a specialized skill. Not because the orchestration itself is harder, but because physical infrastructure demands a kind of operational rigor that virtual environments don’t.

The Assumptions Don’t Hold

When Kubernetes was built, it borrowed heavily from the assumptions of cloud-native design. Nodes are disposable. Networks are programmable. Machines are interchangeable. Scaling means adding more of the same.

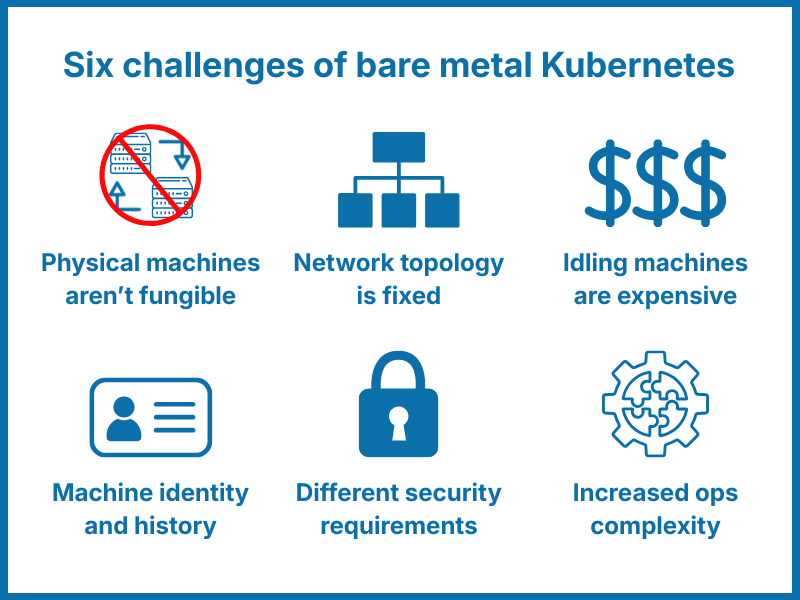

On bare metal, each of these ideas encounters friction. Hardware has identity and history. Cables and switches don’t auto-heal. Scaling out can involve forklifts and power budgets. These realities are not bugs in the design; they’re properties of the physical world. And teams who try to ignore them encounter delays, instability, and unnecessary costs.

As seen in our survey results on on-prem Kubernetes, the shift from cloud patterns to bare metal requirements includes operational complexity that’s often underestimated.

The Practical Challenges

Physical systems require more than inventory tracking. They demand active management with firmware updates, BIOS settings, secure boot configurations, and vendor-specific tooling all accumulating into maintenance requirements. The moment a node fails, the rebuild process is no longer just a matter of restarting a virtual instance. Instead, it’s a coordination of power, provisioning, and networking across a constrained topology.

Consider networks. In cloud environments, topology is abstract. On-prem, it is an asset. Kubernetes does not natively understand where your top-of-rack switches are or which interface leads where. When deploying AI workloads with InfiniBand or ViM storage systems, physical layout isn’t just a detail. It’s a dependency.

Storage, power, cooling, latency. Each of these has a footprint that cannot be hand-waved away. In fact, one of the key takeaways from our CEO Rob Hirschfeld’s conversation with TFIR is that bare metal environments succeed only when operational tooling takes physical constraints seriously from the start.

Lifecycle Automation Becomes Critical

In cloud environments, provisioning is a matter of API orchestration. The infrastructure is abstracted, and the state of the machine is largely immaterial. On bare metal, that model does not apply. Each server has a physical history, and that history affects behavior. Lifecycle automation needs to account for this.

Initial provisioning is only the beginning. Systems must be reimaged, repurposed, patched, and eventually decommissioned, all while staying consistent across different hardware vendors and versions. This includes managing details that cloud-native pipelines never encounter, like BIOS versions, RAID configurations, MAC address binding, and vendor-specific firmware processes.

None of these details are part of Kubernetes, but all of them must be handled cleanly for the cluster to remain functional. Without full lifecycle automation pipelines, teams need to maintain fragile manual scripts and workflows, slowing down lifecycle operations and decreasing reliability.

Why Bother With Bare Metal Kubernetes at All?

Despite these challenges, teams continue to build Kubernetes clusters on bare metal because certain workloads demand it. GPU-intensive training pipelines. Low-latency data processing. Edge environments where space and bandwidth are limited. In these cases, it becomes necessary.

What RackN has seen repeatedly is that bare metal Kubernetes projects fail not for lack of talent, but from a mismatch between the expectations of the platform and the capabilities of the infrastructure. Success comes from aligning the tools and practices with their environment.

Looking Ahead

As AI and virtualization drive demand for on-prem performance, more teams will consider bare metal deployments. Those that succeed will do so by rethinking automation and respecting the hard limits of hardware.

Kubernetes is not incompatible with bare metal. But it requires clarity, patient process design, and systems that account for the complexity, rather than masking it.

If your teams are working in this space, or planning to, we can help. Our automation platform, Digital Rebar, was purpose-built to solve these infrastructure problems. We believe bare metal deserves the same automation discipline as the cloud. And we’ve spent years proving that it’s possible.

Schedule a demo today to provide your physical infrastructure with the comprehensive automation it needs to make on-prem Kubernetes efficient and streamlined.