Why Bare Metal Governance Matters More Than Virtualization Strategy

- Kiera Quinn

- -

- Bare Metal Automation, Virtualization

Most virtualization initiatives do not fail because of the wrong platform selection. Instead, they fail because the underlying infrastructure cannot perform consistently when under pressure. Platform teams usually find this out too late. It’s going to appear after pilot projects stall, rebuild attempts are unsuccessful, or performance becomes unpredictable. By that time, the focus has already shifted to blaming the virtualization stack.

Virtualization platforms highlight existing issues; they do not resolve them. If infrastructure operations depend on undocumented fixes, manual runbooks, and exception-driven processes, the platform will quickly reveal these weaknesses. In the past, VMware accepted this condition, but modern platforms exposed it much sooner. The key difference is not the software’s maturity but the tolerance for discrepancies.

This is why a platform-first mindset often leads teams into recurring cycles of failure. Without disciplined governance of bare metal infrastructure, no virtualization strategy can succeed at scale. The platform serves as a messenger, not the root cause of the problem.

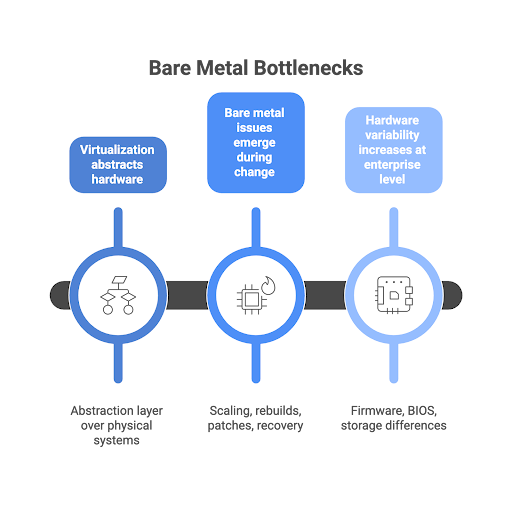

Why Bare Metal Is Always the Bottleneck

Virtualization abstracts hardware; however, this abstraction doesn’t eliminate dependency. Every virtual machine (VM), container runtime, and cluster scheduler still relies on physical systems that must boot predictably, offer consistency, and rebuild reliably each time. When this foundational layer lacks discipline, the entire platform becomes unstable.

Bare metal issues don’t suddenly manifest during routine operations. They typically emerge during moments of change, such as scaling events, rebuilds, patch cycles, and recovery scenarios, which put stress on the assumptions platforms make about hardware behavior. When these assumptions fail, operators may encounter issues that seem sudden but are actually rooted in long-standing inconsistencies.

At the enterprise level, hardware variability increases significantly. Even minor differences in firmware, BIOS settings, or storage configurations can lead to unpredictable behavior. Platforms do not create this variability; rather, they expose it when consistency is essential.

The Hidden Cost of Hardware Drift

Hardware drift doesn’t occur as a single failure; it accumulates quietly over years of operational shortcuts. For instance, a BIOS setting might be adjusted to resolve a performance issue, a firmware update could be applied to one node during an outage, or a RAID layout might be modified to meet short-term requirements. While each change addresses a local problem, it weakens overall consistency.

In many environments, there is no reliable system of record for the hardware state. Teams often assume that similarity exists based on server models or purchase orders, without verifying the actual configurations. As long as workloads remain stable, this assumption seems valid. However, when platforms require repeatable rebuilds, this illusion collapses.

Historically, VMware has managed much of this inconsistency. Its operational model allowed teams to postpone governance decisions while ensuring uptime. This flexibility shaped expectations. However, as organizations move toward immutable infrastructure or automated cluster management, those postponed decisions resurface as obstacles.

Ultimately, drift is not merely a compatibility issue with the platform; it represents governance debt becoming apparent.

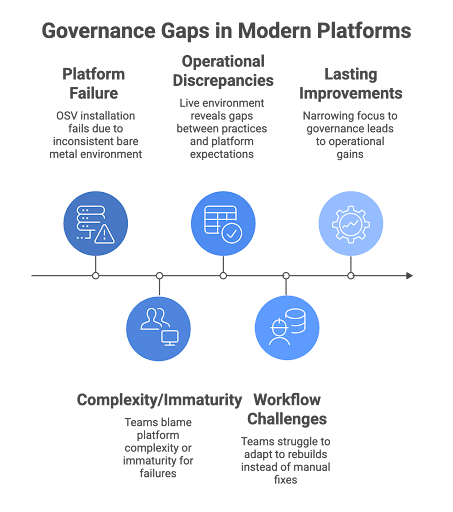

Why Modern Platforms Expose Governance Gaps Faster

Modern virtualization and Kubernetes platforms assume that infrastructure behaves deterministically. They expect nodes to rebuild cleanly, offer identical capabilities, and adhere to declarative configurations. These assumptions do not create fragility; rather, they enforce discipline.

Day 0 or Day 1 installation failures on platforms like OpenShift Virtualization (OSV) often lead teams to blame the issue on complexity or platform immaturity. The real question: “Why is the platform requiring and enforcing standards that the bare metal environment has not consistently upheld?”

What we discover in the live environment is discrepancies between operational practices and platform expectations. There’s friction because it challenges established workflows. Teams that are used to repairing systems manually must now adapt to platforms that require complete rebuilds rather than simple fixes. That process pain and team discomfort comes from gaps in governance rather than the choice of technology.

Failures seen as flaws in the platform can lead to cumbersome workarounds and fragile automation. This is where narrowing focus to governance issues can lead to lasting operational improvements.

The Myth of Platform-First Modernization

Platform-first modernization can be wrought with assumptions. If the platform teams assume that selecting the right virtualization stack will impose order on the environment, things won’t end well. There are persistent assumptions that feel like strong beliefs because platforms promise consistency, automation, and control. In reality, platforms only apply those properties to inputs that already behave predictably.

Platform teams that succeed with new platforms share a common trait. They invest in infrastructure discipline before migration. They enforce hardware lifecycle control, validate rebuilds, and eliminate drift. The platform then operates as intended.

Platform and virtualization teams that struggle are usually following the opposite path. They introduce a new platform into an unmanaged environment and expect improvement to emerge organically. Instead, they encounter stalled pilots, brittle installs, and growing operational resistance.

Platform changes magnify operational maturity. They do not create it.

The Role of Automated Conformance

The earlier sections establish a clear pattern: platforms fail where infrastructure lacks discipline. At this point, the natural question is how teams enforce discipline at the bare metal layer without increasing operational overhead. The answer is automated conformance.

Governance Requires Enforcement, Not Intention

Governance collapses when it relies on human memory and best intentions. Teams can document standards, publish runbooks, and hold design reviews, but without enforcement, environments drift. Automated conformance replaces intent with verification. It defines what “correct” means and continuously validates that systems remain in that state.

Instead of assuming compliance, automated conformance proves it. BIOS settings, firmware versions, storage layouts, and boot configuration stop living in spreadsheets or tribal knowledge. They become an enforced state.

Why Rebuilds Become the Truth Test

Rebuilds force infrastructure to reveal whether governance exists. In unmanaged environments, rebuilds feel dangerous and disruptive. Teams avoid them, which allows drift to accumulate unchecked.

With automated conformance, rebuilds serve a different purpose. They validate that the infrastructure behaves predictably from a zero state. When rebuilds succeed repeatedly, confidence replaces caution.

How Compliance and Conformance Changes Daily Operations

Once enforcement is in place, operational behavior naturally changes. Teams stop repairing systems manually and start rebuilding them confidently. Drift stops accumulating because deviations fail validation early. Most importantly, operators regain trust in their infrastructure.

The result is not just cleaner systems, but calmer operations.

Governance Outcomes With and Without Conformance

We usually witness consistent differences in operational results, whether bare metal governance includes automated conformance.

| Area | Without Automated Conformance | With Automated Conformance |

|---|---|---|

| Rebuild Safety | High risk, avoided | Routine, predictable |

| Drift Detection | Reactive, manual | Continuous, enforced |

| Day 0 / Day 1 | Fragile installs | Repeatable installs |

| Operator Confidence | Low | High |

This transitioning from fragile, exception-driven operations to enforced consistency sets the stage for broader stability gains.

How Hardware Lifecycle Discipline Improves Stability Everywhere

Once automated conformance is in place, its benefits extend beyond any single platform decision. Hardware lifecycle discipline begins delivering value immediately, even before organizations change virtualization strategies.

Immediate Impact on Existing Environments

VMware environments gain consistency and stability, reducing performance variance. This makes capacity planning more accurate and reduces the length of maintenance windows by reducing the number of unknown factors. Importantly, all these improvements can be achieved without migrating to new platforms; they stem from eliminating hardware-level uncertainty.

This represents a big inflection point for bare metal and datacenter operations. You get the value of cloud-like governance without requiring complete transformation of your team.

Why Pilots Stop Stalling

When teams later evaluate OpenShift Virtualization or Kubernetes on bare metal, the experience changes dramatically. Installers stop failing unpredictably. Nodes behave consistently across rebuilds. Platform evaluation becomes a technical decision instead of an operational risk.

Governance does not guarantee success. It removes unnecessary failure modes that previously obscured the signal with noise.

Stability That Travels With the Infrastructure

Perhaps most importantly, lifecycle discipline makes stability portable. Infrastructure no longer resets to chaos when platforms change. Teams can run parallel environments, test alternatives, or adopt future platforms without rebuilding their operational foundation each time.

At this stage, infrastructure shifts from being a liability to becoming an asset.

Why Vendor-Neutral Governance Must Live Below Platforms

As stability improves across environments, another principle becomes clear: governance must remain independent of platforms to preserve flexibility. This realization naturally leads to vendor neutrality.

Neutrality Is an Architectural Decision

Vendor neutrality doesn’t emerge from licensing terms or purchasing strategy. It emerges from architecture. When lifecycle control embeds inside a single platform, infrastructure inherits that platform’s constraints, assumptions, and upgrade cadence.

Placing governance below platforms avoids this coupling. Infrastructure behaves consistently regardless of which virtualization or orchestration layer sits on top.

The Hidden Cost of Platform-Coupled Control

Platform-managed lifecycle control quietly reduces freedom by:

- Turning infrastructure upgrades into platform upgrades

- Limiting hardware choice to platform support matrices

- Binding operations to a single vendor’s roadmap

Optionality as an Operational Outcome

Vendor-neutral governance provides flexibility, allowing teams to run VMware today, evaluate OpenShift Virtualization tomorrow, and prepare for future platforms without reworking hardware processes. This stability ensures infrastructure remains consistent while platforms evolve. Flexibility indicates control, not indecision.

Ultimately, this vendor-neutral approach unlocks the primary benefit: Optionality as an Operational Outcome.

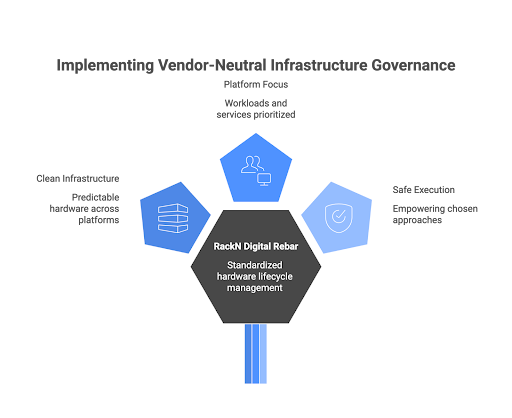

How Teams Implement Vendor-Neutral Infrastructure Governance

At this point, the theory is clear. The remaining question is practical: how do teams implement governance below the platform level without creating another silo or toolchain?

Governance Below, Platforms Above

Successful organizations separate concerns deliberately. They enforce hardware lifecycle discipline once, across all platforms. Platforms inherit clean, predictable infrastructure instead of redefining it.

This separation allows platform teams to focus on workloads and services while infrastructure teams retain ownership of physical systems.

Where RackN Fits

RackN offers a practical approach for organizations to implement a standardized hardware lifecycle management model through its RackN Digital Rebar platform. This platform operates independently of any virtualization environment and ensures compliance before any platform installations. It continuously validates, rebuilds, and maintains consistency across different environments.

RackN supports various virtualization platforms, including VMware, OpenShift Virtualization, KubeVirt, and other platforms like Kubernetes on bare metal, without displaying any bias. It does not force organizations to migrate or follow specific strategies; instead, it empowers teams to execute their chosen approaches safely.

By instilling discipline at the infrastructure level, RackN transforms platforms into effective tools rather than potential risks.

Conclusion: Govern the Metal Before You Choose the Platform

A strong virtualization strategy can’t compensate for inadequate hardware governance. Platforms amplify the discipline in the underlying infrastructure. When governance is weak, platforms tend to fail early and visibly. Conversely, when governance is strong, platforms function as intended.

Establishing strong bare metal discipline is important for successful modernization. It stabilizes existing environments, accelerates pilot projects, and maintains vendor flexibility. Organizations that prioritize fixing governance regain control, while those that neglect it often repeat the same failures with each new platform.

If you want your virtualization efforts to succeed, start by ensuring strong governance over your hardware. If your teams are facing issues like fragile installations, stalled pilot projects, or risky rebuilds, the root of the problem likely lies beneath the platform. Assess your bare metal readiness, identify any lifecycle gaps, and standardize your hardware governance before embarking on your next VMware optimization or OpenShift Virtualization pilot.

Consider reaching out to RackN to learn how RackN Digital Rebar can help teams enforce infrastructure discipline, eliminate drift, and build virtualization strategies on a solid foundation.